Insights in 'Designing Data-Intensive Applications' - Part 2/3

A post to share key insights after reading Martin Kleppmann's essential book. Part 2/3

Welcome to my blog on the book "Designing Data-Intensive Applications" by Martin Kleppmann. In this post, I will share the key principles, trade-offs, and technologies that I found most interesting for building robust and scalable data systems.

Most of these notes appear as in the original book or are lightly adapted by me, but all ideas belong to Martin or to the authors he quotes in his book.

This is Part 2. Check Part 1, Foundations of Data Systems, here, and Part 3, Derived Data, here.

Distributed Data

In the first part, we visited aspects of data systems that apply when data is stored on a single machine. For this second part, we move up a level and ask: what happens if multiple machines are involved in storage and retrieval of data?

Intro

Why should I distribute?

- Scalability: If your data volume, read load, or write load grows bigger than a single machine can handle, you could spread the load across multiple machines.

- Fault tolerance/High Availability: If your application needs to continue working even if one machine (or several machines, or the network, or an entire datacenter) goes down, you can use multiple machines to give you redundancy.

- Latency: If you have users around the world, you might want to have servers at various locations worldwide so that each user can be served from a datacenter that is geographically close to them (think about Netflix).

Scaling to Higher Load

If all you need is to scale to higher load, the simplest approach is to buy a more powerful machine (vertical scaling or scaling up). The problem with a shared-memory approach is that the cost grows faster than linearly.

Shared-Nothing Architectures

Also called horizontal scaling or scaling out, this approach makes each node use its CPUs, RAM, and disks independently. Any coordination between nodes is done at the software level, using a conventional network.

No special hardware is required, so you can use whatever machines have the best price/performance ratio. You can potentially distribute data across multiple geographic regions, and thus reduce latency for users and potentially be able to survive the loss of an entire datacenter.

Shared-nothing architecture also implies additional complexity for applications and sometimes limits the expressiveness of the data models you can use.

5. Replication

Replication offers:

- high availability: keeping the system running if one machine goes down

- allowing an application to continue working when there is a network interruption

- latency: placing data geographically close to users, for faster interaction

- scalability: performing reads on replicas

Different from Partitioning

Partitioning means splitting a big database into smaller subsets called partitions so that different partitions can be assigned to different nodes (also known as sharding).

Following the leader

Every write to the database needs to be processed by every replica; otherwise, the replicas would no longer contain the same data. The most common solution for this is called leader-based replication (also known as active/passive or master–slave). Whenever the leader writes new data to its local storage, it also sends the data change to all of its followers as part of a replication log or change stream. Each follower takes the log from the leader and updates its local copy of the database accordingly, by applying all writes in the same order as they were processed on the leader.
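To make the idea more concrete, here is a minimal in-memory sketch of my own (not from the book). The `Replica` and `Leader` classes are hypothetical, and the "network" is just a direct method call. It only shows the core rule: followers apply the leader's log in the same order.

```python
class Replica:
    """A node holding a local copy of the data (hypothetical, in-memory)."""

    def __init__(self):
        self.data = {}
        self.applied = 0  # number of log entries applied so far

    def apply(self, log):
        # Apply any entries not yet seen, strictly in log order.
        for key, value in log[self.applied:]:
            self.data[key] = value
        self.applied = len(log)


class Leader(Replica):
    def __init__(self, followers):
        super().__init__()
        self.log = []  # the replication log
        self.followers = followers

    def write(self, key, value):
        # The leader appends to its log and updates its own local storage.
        self.log.append((key, value))
        self.apply(self.log)

    def replicate(self):
        # A real system streams the log over the network; here it is a direct call.
        for follower in self.followers:
            follower.apply(self.log)


followers = [Replica(), Replica()]
leader = Leader(followers)
leader.write("x", 1)
leader.write("x", 2)
stale = dict(followers[0].data)  # followers have not caught up yet
leader.replicate()
```

Before `replicate()` runs, a read from a follower would see nothing at all, which is exactly the stale-read problem discussed further down.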

How many leaders? Yes…

There are three main approaches:

- Single-leader replication: Clients send all writes to a single node (the leader), which sends a stream of data change events to the other replicas (followers). Reads can be performed on any replica, but reads from followers might be stale. No conflicts! Great for read-heavy workloads. Scaling reads by adding many followers realistically only works with asynchronous replication, which means followers may temporarily serve outdated data (eventual consistency).

- Multi-leader replication: Clients send each write to one of several leader nodes, any of which can accept writes. The leaders send streams of data change events to each other and to any follower nodes.

- Leaderless replication: Clients send each write to several nodes, and read from several nodes in parallel in order to detect and correct nodes with stale data. The client may get different responses from different nodes. Version numbers are used to determine which value is newer.
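For the leaderless case, a rough sketch of how a client could use version numbers might look like this. The function names and the `(version, value)` response shape are my own assumptions, not any particular database's API.

```python
def newest_response(responses):
    """Return the (version, value) pair with the highest version number."""
    return max(responses, key=lambda response: response[0])


def stale_nodes(responses_by_node):
    """List the nodes whose response is older than the newest one seen."""
    newest_version, _ = newest_response(list(responses_by_node.values()))
    return sorted(
        node
        for node, (version, _) in responses_by_node.items()
        if version < newest_version
    )


# Hypothetical responses from three replicas for the same key.
responses = {
    "node_a": (7, "new"),
    "node_b": (6, "old"),
    "node_c": (7, "new"),
}
```

Here the client would return the value at version 7 and could write it back to `node_b` to correct the stale copy.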

Multi-leader and leaderless replication can be more robust in the presence of faulty nodes, network interruptions, and latency spikes—at the cost of being harder to reason about (concurrency allows for conflicts) and providing only very weak consistency guarantees. If you want synchronous conflict detection, you might as well just use single-leader replication.

Sync or Async? Semi!

In practice, enabling synchronous replication usually means that one follower is synchronous and the others are asynchronous. If the synchronous follower becomes unavailable or slow, one of the asynchronous followers is made synchronous. This guarantees that you have an up-to-date copy of the data on at least two nodes: the leader and one synchronous follower. This configuration is sometimes also called semi-synchronous.

Although asynchronous replication can be fast when the system is running smoothly, it’s important to figure out what happens when replication lag increases and servers fail. If a leader fails and you promote an asynchronously updated follower to be the new leader, recently committed data may be lost.

Durability vs Write Throughput

If the leader fails and is not recoverable, any writes that have not yet been replicated to followers are lost. This means that a write is not guaranteed to be durable, even if it has been confirmed to the client. However, a fully asynchronous configuration has the advantage that the leader can continue processing writes, even if all of its followers have fallen behind.

Weakening durability may sound like a bad trade-off, but asynchronous replication is nevertheless widely used, especially if there are many followers or if they are geographically distributed.

We lost the Leader: Failover

Handling a failure of the leader is trickier: one of the followers needs to be promoted to be the new leader, clients need to be reconfigured to send their writes to the new leader, and the other followers need to start consuming data changes from the new leader.

If the old leader rejoins the cluster after a new leader has been chosen, its unreplicated writes are commonly discarded. Discarding writes is especially dangerous if other storage systems outside of the database need to be coordinated with the database contents. For example, in one incident at GitHub, an out-of-date MySQL follower was promoted to leader. The database used an auto-incrementing counter to assign primary keys to new rows, but because the new leader’s counter lagged behind the old leader’s, it reused some primary keys that were previously assigned by the old leader. These primary keys were also used in a Redis store, so the reuse of primary keys resulted in inconsistency between MySQL and Redis, which caused some private data to be disclosed to the wrong users.

Problems with Replication

In the move to distributed (replicated and partitioned) databases, many systems have abandoned transactions, claiming that they are too expensive in terms of performance and availability, and asserting that eventual consistency is inevitable in a scalable system.

A few consistency models are helpful for deciding how an application should behave under replication lag:

- Read-after-write consistency (read your own writes): Users should always see data that they submitted themselves. Always read the user’s own profile from the leader, and any other users’ profiles from a follower. Monitor the replication lag on followers and prevent queries on any follower that is more than one minute behind the leader.

- Monotonic reads: Make sure that each user always makes their reads from the same replica. For example, the replica can be chosen based on a hash of the user ID. However, if that replica fails, the user’s queries will need to be rerouted to another replica.

- Consistent prefix reads: Users should see the data in a state that makes causal sense: for example, seeing a question and its reply in the correct order. One solution is to make sure that any writes that are causally related to each other are written to the same partition, but in some applications that cannot be done efficiently.
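The first two rules can be sketched as a small routing function. This is my own illustration: the node names and function names are made up. I use `hashlib` rather than Python's built-in `hash()` because the latter is randomized per process for strings, which would defeat the purpose of a stable choice.

```python
import hashlib


def replica_for_user(user_id, followers):
    """Monotonic reads: always pick the same follower for a given user."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(followers)
    return followers[index]


def route_read(reader_id, profile_owner_id, followers):
    """Read-after-write: a user's own profile is always read from the leader."""
    if reader_id == profile_owner_id:
        return "leader"
    return replica_for_user(reader_id, followers)


followers = ["follower-1", "follower-2", "follower-3"]
own_profile = route_read("alice", "alice", followers)
other_profile = route_read("alice", "bob", followers)
```

This sketch leaves out the failure case mentioned above: if the chosen follower goes down, the user has to be rerouted, and monotonicity is no longer guaranteed by the hash alone.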

Happens-before relationship: whenever you have two operations A and B, there are three possibilities: either A happened before B, or B happened before A, or A and B are concurrent.
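One common way to tell these three cases apart is a version vector: a per-node counter attached to each operation. The sketch below is my own illustration under that assumption, and the `compare` helper is hypothetical.

```python
def compare(a, b):
    """Compare two version vectors given as {node_name: counter} dicts."""
    nodes = set(a) | set(b)
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "a happened before b"
    if b_le_a:
        return "b happened before a"
    # Each side has seen something the other has not.
    return "concurrent"
```

For example, `compare({"n1": 1}, {"n1": 2})` reports that a happened before b, while `compare({"n1": 2}, {"n1": 1, "n2": 1})` reports the two as concurrent.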

Amazin' conflict

For some time, the conflict resolution logic on the Amazon shopping cart would preserve items added to the cart, but not items removed from the cart. Thus, customers would sometimes see items reappearing in their carts even though they had previously been removed.
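A simplified way to picture this, assuming the merge logic is a plain set union (my own simplification for illustration, not Amazon's actual code):

```python
def merge_carts(cart_a, cart_b):
    """Resolve a conflict by keeping every item seen on either replica."""
    return cart_a | cart_b


original = {"book", "milk"}
replica_1 = original - {"milk"}  # the customer removes milk on one replica
replica_2 = original | {"eggs"}  # and concurrently adds eggs on another
merged = merge_carts(replica_1, replica_2)
```

The addition survives the merge, but so does the milk: a union cannot tell "never removed" apart from "removed on the other replica".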

6. Partitioning

When you have so much data that storing and processing it on a single machine is no longer feasible, you can partition it to spread the data and query load evenly across multiple machines, avoiding hot nodes (scalability).

Approaches

- Key range partitioning, where sorting keys has the advantage that efficient range queries are possible. Partitions are typically rebalanced dynamically by splitting the range into two subranges when a partition gets too big (against hot ranges)

- Hash partitioning, where a hash function is applied to each key, and a partition owns a range of hashes. Range queries become inefficient, but load may be distributed more evenly.

- Hybrid, with a compound key: using one part of the key to identify the partition and another part for the sort order.
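Here is a small sketch of the first two approaches. The boundaries, the choice of four partitions, and the use of SHA-256 are arbitrary assumptions of mine, picked only to keep the example deterministic.

```python
import bisect
import hashlib

# Hypothetical boundaries giving 4 partitions: [..g), [g..n), [n..t), [t..]
RANGE_BOUNDARIES = ["g", "n", "t"]


def range_partition(key):
    """Key range partitioning: adjacent keys land in the same partition."""
    return bisect.bisect_right(RANGE_BOUNDARIES, key)


def hash_partition(key, num_partitions=4):
    """Hash partitioning: each partition owns a contiguous range of hashes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    h = int.from_bytes(digest[:4], "big")  # a 32-bit hash value
    return (h * num_partitions) >> 32


keys = ["apple", "banana", "grape", "zebra"]
by_range = [range_partition(k) for k in keys]
by_hash = [hash_partition(k) for k in keys]
```

With range partitioning, "apple" and "banana" sit next to each other, so a scan over keys starting with a to f touches one partition. With hashing, neighbouring keys can end up anywhere, which is why range queries get expensive.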

Consistent Hashing

It is a way of evenly distributing load across an internet-wide system of caches such as a CDN. It uses randomly chosen partition boundaries to avoid the need for central control or distributed consensus. Note that consistent here has nothing to do with replica consistency or ACID consistency, but rather describes a particular approach to rebalancing.

This particular approach actually doesn’t work very well for databases, so it is rarely used in practice. Because this is so confusing, it’s best to avoid the term consistent hashing and just call it hash partitioning instead.

Secondary indexes

A secondary index can be partitioned in two ways:

- Document-partitioned (local), where the secondary indexes are stored in the same partition as the primary key and value. This means that only a single partition needs to be updated on write, but a read of the secondary index requires a scatter/gather across all partitions.

- Term-partitioned (global), where the secondary indexes are partitioned separately, using the indexed values. An entry in the secondary index may include records from all partitions of the primary key. When a document is written, several partitions of the secondary index need to be updated; however, a read can be served from a single partition.
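A toy version of both layouts, as I understand them. The two partitions, the colour data, and the `term_partition` function are all made up for the example.

```python
from collections import defaultdict

NUM_PARTITIONS = 2
docs = {1: "red", 2: "blue", 3: "red", 4: "red"}  # doc_id -> colour

# Local: each partition indexes only the documents it stores.
local_index = [defaultdict(set) for _ in range(NUM_PARTITIONS)]
# Global: the whole entry for a term lives on exactly one partition.
global_index = [defaultdict(set) for _ in range(NUM_PARTITIONS)]


def term_partition(term):
    # Toy deterministic placement of a term (an assumption for this sketch).
    return sum(term.encode("utf-8")) % NUM_PARTITIONS


for doc_id, colour in docs.items():
    local_index[doc_id % NUM_PARTITIONS][colour].add(doc_id)
    global_index[term_partition(colour)][colour].add(doc_id)


def read_local(colour):
    """Scatter/gather: every partition has to be asked."""
    result = set()
    for partition in local_index:
        result |= partition.get(colour, set())
    return result


def read_global(colour):
    """A single partition holds the complete entry for this term."""
    return global_index[term_partition(colour)].get(colour, set())
```

Both reads return the same documents. The difference is how many partitions are touched on read versus on write.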

Amazon DynamoDB states that its global secondary indexes are updated (asynchronously) within a fraction of a second in normal circumstances.

Rebalancing

- Data storage, read and write requests (the “load”) should be shared fairly between nodes.

- While rebalancing is happening, the database should continue accepting reads and writes.

- No more data than necessary should be moved between nodes.

How not to do it: hash mod N

If the number of nodes N changes, most of the keys will need to be moved from one node to another. For example, say hash(key) = 123456. If you initially have 10 nodes, that key starts out on node 6 (because 123456 mod 10 = 6). When you grow to 11 nodes, the key needs to move to node 3 (123456 mod 11 = 3), and when you grow to 12 nodes, it needs to move to node 0 (123456 mod 12 = 0). Such frequent moves make rebalancing excessively expensive.
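You can check the numbers above, and get a feel for how bad it is, with a few lines. The helper names are mine, and I use consecutive integers as a stand-in for uniformly distributed hash values.

```python
def node_for(key_hash, num_nodes):
    """The naive assignment: hash mod N."""
    return key_hash % num_nodes


def fraction_moved(key_hashes, old_n, new_n):
    """Share of keys whose node changes when the cluster is resized."""
    moved = sum(
        1 for h in key_hashes if node_for(h, old_n) != node_for(h, new_n)
    )
    return moved / len(key_hashes)


hashes = range(110_000)  # stand-in for uniformly distributed hash values
moved = fraction_moved(hashes, 10, 11)
```

Going from 10 to 11 nodes moves 10 out of every 11 keys (about 91%), even though ideally only about one eleventh of the data would need to move to the new node.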

A better way is to do dynamic partitioning. If there is only a small amount of data, a small number of partitions is sufficient, so overheads are small; if there is a huge amount of data, the size of each individual partition is limited to a configurable maximum.

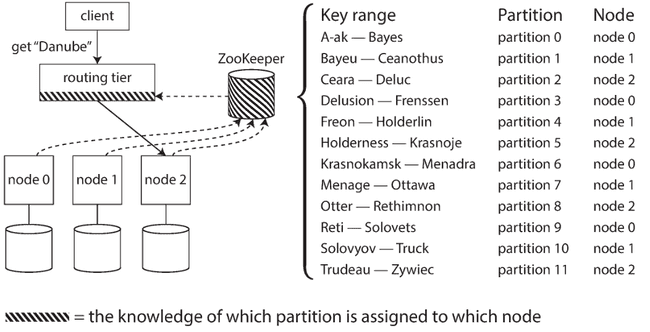

Routing queries to the appropriate partition

How does the component making the routing decision (which may be one of the nodes, the routing tier, or the client) learn about changes in the assignment of partitions to nodes? This is a challenging problem, because it is important that all participants agree—otherwise requests would be sent to the wrong nodes and not handled correctly. Many distributed data systems rely on a separate coordination service such as ZooKeeper.

7. Transactions

Transactions are an abstraction layer that allows an application to pretend that certain concurrency problems and certain kinds of hardware and software faults don’t exist.

Conceptually, all the reads and writes in a transaction are executed as one operation: either the entire transaction succeeds (commit) or it fails (abort, rollback). If it fails, the application can safely retry.

Not every application needs transactions, and sometimes there are advantages to weakening transactional guarantees or abandoning them entirely (for example, to achieve higher performance or higher availability).

For complex access patterns, transactions can hugely reduce the number of potential error cases you need to think about. Without transactions, various error scenarios (processes crashing, network interruptions, power outages, disk full, unexpected concurrency, etc.) mean that data can become inconsistent in various ways.

Isolation Levels and Famous Race Conditions

Read Committed

The most basic level of transaction isolation. It makes two guarantees:

- When reading from the database, you will only see data that has been committed (no dirty reads).

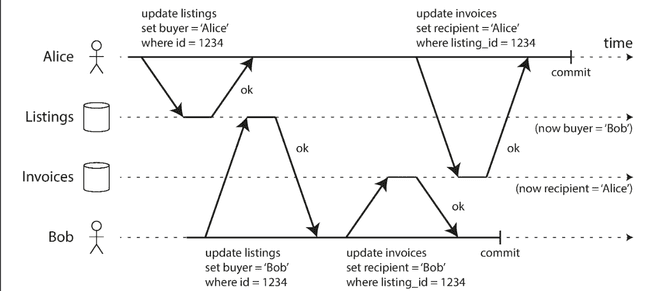

- When writing to the database, you will only overwrite data that has been committed (no dirty writes).

Dirty reads One client reads another client’s writes before they have been committed.

Dirty writes One client overwrites data that another client has written, but not yet committed.

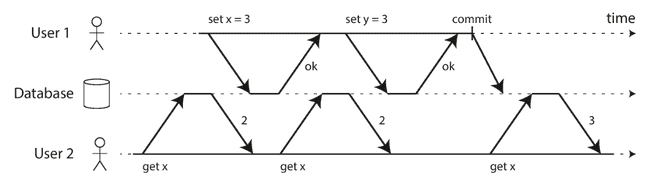

Snapshot Isolation/Repeatable Read

There are still plenty of ways in which you can have concurrency bugs even with read committed isolation.

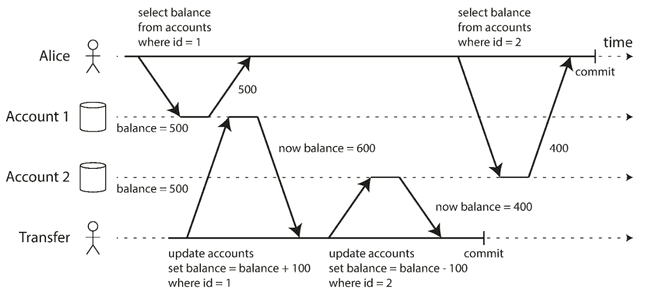

Read skew/Non-repeatable reads A client sees different parts of the database at different points in time. This issue is most commonly prevented with snapshot isolation, which allows a transaction to read from a consistent snapshot corresponding to data committed in one particular point in time.

Lost Updates, Write Skew and Phantoms

The read committed and snapshot isolation levels are about the guarantees of what a read-only transaction can see in the presence of concurrent writes. What kind of conflicts can occur between concurrently writing transactions?

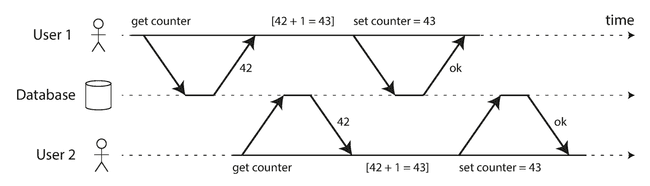

Lost updates Two clients concurrently perform a read-modify-write cycle. One overwrites the other’s write without incorporating its changes, so data is lost.
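The interleaving can be replayed deterministically in a few lines. The `Store` class is hypothetical. Compare-and-set is one known way of preventing lost updates, and it is shown here only as an illustration.

```python
class Store:
    """A single value with an optional compare-and-set write."""

    def __init__(self, value):
        self.value = value

    def compare_and_set(self, expected, new):
        # Write only if the value is unchanged since it was read.
        if self.value != expected:
            return False
        self.value = new
        return True


# Unsafe read-modify-write: both clients read 42 before either writes.
counter = Store(42)
read_a = counter.value
read_b = counter.value
counter.value = read_a + 1
counter.value = read_b + 1  # overwrites A's increment
lost_update_result = counter.value

# With compare-and-set, B's stale write is rejected and B retries.
counter = Store(42)
read_a = counter.value
read_b = counter.value
ok_a = counter.compare_and_set(read_a, read_a + 1)
ok_b_first_try = counter.compare_and_set(read_b, read_b + 1)
if not ok_b_first_try:
    read_b = counter.value
    counter.compare_and_set(read_b, read_b + 1)
safe_result = counter.value
```

In the unsafe version two increments produce 43. In the second version B notices its premise is stale, re-reads, and the counter ends at 44.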

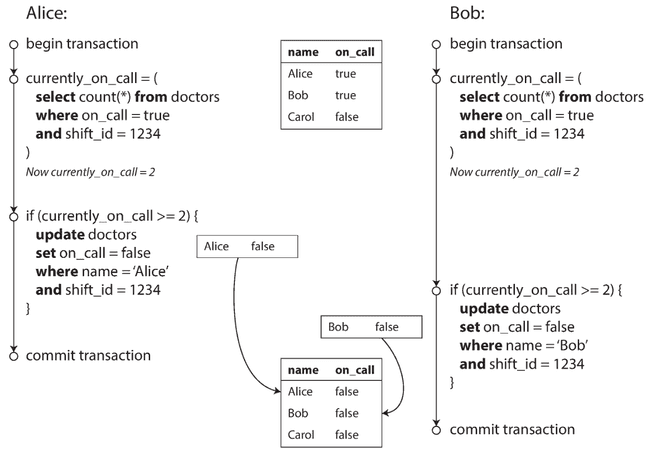

Write skew A transaction reads something, makes a decision based on the value it saw, and writes the decision to the database. However, by the time the write is made, the premise of the decision is no longer true.

Another example around preventing double-spending: a service that allows users to spend money or points needs to check that a user doesn’t spend more than they have. You might implement this by inserting a tentative spending item into a user’s account, listing all the items in the account, and checking that the sum is positive. With write skew, it could happen that two spending items are inserted concurrently that together cause the balance to go negative, but that neither transaction notices the other.
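Here is that double-spending scenario replayed by hand. It is a simulation of two transactions reading from the same snapshot, not real database code, and the amounts are made up. I check for a non-negative sum rather than a strictly positive one.

```python
def try_spend(snapshot_items, amount):
    """Insert a tentative spending item and check the sum on the snapshot."""
    tentative = snapshot_items + [-amount]
    return sum(tentative) >= 0


committed = [100]  # the account starts with 100 points

# Both transactions start from the same consistent snapshot.
snapshot_a = list(committed)
snapshot_b = list(committed)

a_ok = try_spend(snapshot_a, 70)
b_ok = try_spend(snapshot_b, 70)

# Each transaction only writes its own new row, so neither write conflicts.
if a_ok:
    committed.append(-70)
if b_ok:
    committed.append(-70)

balance = sum(committed)
```

Each check passes on its own snapshot, yet the committed balance ends up at -40. Nothing was overwritten, which is why this is not a lost update and needs a different fix.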

Phantom reads A transaction reads objects that match some search condition. Another client makes a write that affects the results of that search. Snapshot isolation prevents straightforward phantom reads, but phantoms in the context of write skew require special treatment, such as index-range locks.

Serializable isolation

Usually regarded as the strongest isolation level. It guarantees that even though transactions may execute in parallel, the end result is the same as if they had executed serially, thus preventing race conditions.

But if serializable isolation is so much better than the mess of weak isolation levels, why isn’t everyone using it?! Most databases that provide serializability today use one of three techniques.

Literally executing transactions in a serial order This approach is only viable under certain constraints:

- Every transaction must be small and fast, because it takes only one slow transaction to stall all transaction processing.

- The active dataset must fit in memory.

- Write throughput must be low enough to be handled on a single CPU core.

Two-phase locking (2PL) Several transactions are allowed to concurrently read the same object as long as nobody is writing to it. But as soon as anyone wants to modify or delete an object, exclusive access is required. In 2PL, writers don’t just block other writers; they also block readers and vice versa: it's pessimistic.

- If transaction A has read an object and transaction B wants to write to that object, B must wait until A commits or aborts before it can continue. (This ensures that B can’t change the object unexpectedly behind A’s back.)

- If transaction A has written an object and transaction B wants to read that object, B must wait until A commits or aborts before it can continue.
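The two rules boil down to a small compatibility check between shared (read) and exclusive (write) locks. This is only a sketch of the decision, with a made-up `can_acquire` helper. A real lock manager also needs queues and deadlock detection.

```python
def can_acquire(requested, held_by_others):
    """Decide whether a lock can be granted right now.

    requested: "shared" (for reading) or "exclusive" (for writing).
    held_by_others: lock modes other transactions hold on the same object.
    """
    if requested == "shared":
        # Readers can share with readers, but must wait for a writer.
        return "exclusive" not in held_by_others
    # A writer needs the object entirely to itself.
    return len(held_by_others) == 0
```

So `can_acquire("shared", ["shared"])` is true, while a writer facing any existing reader, or a reader facing a writer, has to wait until the other transaction commits or aborts.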

Serializable snapshot isolation (SSI) On the one hand, we have implementations of serializability that don’t perform well (two-phase locking) or don’t scale well (serial execution). On the other hand, we have weak isolation levels that have good performance, but are prone to various race conditions (lost updates, write skew, phantoms, etc.).

A fairly new algorithm (SSI) avoids most of the downsides of the previous approaches. It uses an optimistic approach, allowing transactions to proceed without blocking. When a transaction wants to commit, it is checked, and it is aborted if the execution was not serializable.

Snapshot isolation has the mantra "readers never block writers", and "writers never block readers", which captures this key difference between snapshot isolation and two-phase locking. Because 2PL provides serializability, it protects against all the race conditions, including lost updates and write skew.

If the database keeps track of each transaction’s activity in great detail, it can be precise about which transactions need to abort, but the bookkeeping overhead can become significant. Less detailed tracking is faster, but may lead to more transactions being aborted than strictly necessary.

The rate of aborts significantly affects the overall performance of SSI. For example, a transaction that reads and writes data over a long period of time is likely to run into conflicts and abort, so SSI requires that read-write transactions be fairly short (long-running read-only transactions may be okay). However, SSI is probably less sensitive to slow transactions than two-phase locking or serial execution.

8. The Trouble with Distributed Systems

In the end, our task as engineers is to build systems that meet user expectations, in spite of everything that goes wrong in the real world. In distributed systems, pessimism pays off, because plenty of things can go wrong:

Nondeterminism and Partial Failures

- Ethernet and IP are packet-switched protocols, which suffer from queueing and thus unbounded delays in the network.

- Clocks in nodes might be out of sync, suddenly jumping in time.

- A node in a distributed system must assume that its execution can be paused (e.g. by garbage collection) for a significant length of time at any point.

Such partial failures are inherent to distributed systems, and they are nondeterministic. When software interacts with other nodes, there's a possibility of occasional failures, slow responses, or timeouts. Tolerating partial failures is the goal: the system should keep functioning despite broken parts.

Still, redundant networking gear doesn’t guard against human error (e.g. misconfigured switches), which is a major cause of outages.

The bigger a system gets, the more likely it is that one of its components is broken. Over time, broken things get fixed and new things break, but in a system with thousands of nodes, it is reasonable to assume that something is always broken.

Detecting faults

Timeouts are often used, but they can't distinguish between network and node failures. Even once detected, tolerating faults is complex due to the lack of shared state between nodes. Information flows via an unreliable network, necessitating protocols that involve multiple nodes to reach agreement.

Rather than using configured constant timeouts, systems can continually measure response times and their variability (jitter), and automatically adjust timeouts according to the observed response time distribution.
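A minimal sketch of that idea, assuming a timeout of "mean plus a few standard deviations". The multiplier `k` and the `floor_ms` lower bound are arbitrary values I picked, not recommendations.

```python
import statistics


def adaptive_timeout(response_times_ms, k=4.0, floor_ms=10.0):
    """Derive a timeout from recently observed response times."""
    mean = statistics.fmean(response_times_ms)
    jitter = statistics.pstdev(response_times_ms)  # variability of the samples
    return max(floor_ms, mean + k * jitter)


steady = adaptive_timeout([100.0, 100.0, 100.0])
noisy = adaptive_timeout([50.0, 100.0, 150.0])
```

Both sample sets have the same mean, but the noisy one yields a much longer timeout, so a node on a jittery link is not declared dead too eagerly.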

With careful use of quality of service (prioritization and scheduling of packets) and admission control (rate-limiting senders), it is possible to provide statistically bounded delay.

It can also pay off to deliberately trigger network problems and test the system’s response (this is the idea behind Netflix's Chaos Monkey).

TCP vs UDP

Some latency-sensitive applications, such as videoconferencing, use UDP rather than TCP. It’s a trade-off between reliability and variability of delays: as UDP does not perform flow control and does not retransmit lost packets, it avoids some of the reasons for variable network delays.

Time is Money

The "MiFID II" draft European regulation for financial institutions requires all high-frequency trading funds to synchronize their clocks to within 100 microseconds of UTC, in order to help debug market anomalies such as “flash crashes” and to help detect market manipulation.

The Worst Error

With last-write-wins conflict resolution based on timestamps, database writes can mysteriously disappear: a node with a lagging clock is unable to overwrite values previously written by a node with a fast clock until the clock skew between the nodes has elapsed. This scenario can cause arbitrary amounts of data to be silently dropped without any error being reported to the application.
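To see how a write can vanish, here is a tiny simulation of a register that keeps whichever write carries the highest timestamp. The class and the clock offsets are made up for the example.

```python
class LwwRegister:
    """Keeps only the write with the highest timestamp."""

    def __init__(self):
        self.value = None
        self.timestamp = float("-inf")

    def write(self, value, timestamp):
        if timestamp > self.timestamp:
            self.value, self.timestamp = value, timestamp
            return True
        return False  # the write is silently dropped


register = LwwRegister()
true_time = 1000.0

fast_clock = true_time + 5.0  # node A's clock runs 5 seconds ahead
register.write("from A", fast_clock)

true_time += 1.0  # one real second later
lagging_clock = true_time - 2.0  # node B's clock runs 2 seconds behind
accepted = register.write("from B", lagging_clock)
```

Node B's write happened later in real time, but its timestamp is older, so it is discarded and the register still holds the value from A.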

In real life it's extremely difficult to calculate the true, current time. A close exception is Google’s TrueTime API, which explicitly reports the confidence interval on the local clock. When you ask it for the current time, you get back two values: [earliest, latest], which are the earliest possible and the latest possible timestamp.
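Such intervals are useful for ordering events: if two intervals do not overlap, the order is certain. The sketch below only illustrates that comparison. `TimeInterval` and `definitely_before` are names I made up, not the actual TrueTime API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeInterval:
    earliest: float
    latest: float


def definitely_before(a, b):
    """True only if a ended before b could possibly have started."""
    return a.latest < b.earliest


a = TimeInterval(earliest=10.0, latest=12.0)
b = TimeInterval(earliest=13.0, latest=15.0)
c = TimeInterval(earliest=11.0, latest=14.0)
```

Here `a` is definitely before `b`, but `a` and `c` overlap, so neither order can be claimed with confidence.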

Hard real-time systems

Computers that control aircraft, rockets, or cars must respond quickly to their sensor inputs. There is a specified deadline by which the software must respond; if it doesn’t, that may cause a failure of the entire system. Moreover, “real-time” is not the same as “high-performance”—in fact, real-time systems may have lower throughput, since they have to prioritize timely responses above all else.

Cost Trade-Off

You can always have a cheaper system that’s further from the ideal of a distributed system: scalable, fault tolerant, and with low latency. Whether you choose cheap and unreliable over expensive and reliable depends on your situation.

There is no such thing as perfect reliability, but only limits of what one can realistically promise.

The internet shares network bandwidth dynamically. Senders want to get their packets out as quickly as possible, and the network switches decide packet by packet what goes over the wire. This approach has the downside of queueing, but the advantage is that it maximizes utilization of the wire. The wire has a fixed cost, so if you utilize it better, each byte you send over the wire is cheaper.

Multi-tenant environments with dynamic resource partitioning provide better utilization, so they are cheaper, but they have the downside of variable delays (vs single-tenant environments with less delay jitter).

Variable delays in networks are not a law of nature, but simply the result of a cost/benefit trade-off.

Knowledge, Truth and Lies

A service’s clients are often run by people whose priorities are very different from the priorities of the people running the service. I have been both a victim and a perpetrator of this while working for a Big Tech firm, where interests are always in conflict.

The moral of the story is that a node cannot necessarily trust its own judgment of a situation.

Byzantine Faults

Distributed systems problems become much harder if there is a risk that nodes may “lie” (send arbitrary faulty or corrupted responses)—for example, if a node may claim to have received a particular message when in fact it didn’t. Such behavior is known as a Byzantine fault.

The Byzantine Generals Problem

This problem is a generalization of the so-called Two Generals Problem, which imagines a situation in which two army generals need to agree on a battle plan. As they have set up camp on two different sites, they can only communicate by messenger, and the messengers sometimes get delayed or lost (like packets in a network).

A system is Byzantine fault-tolerant if it continues to operate correctly even if some of the nodes are malfunctioning and not obeying the protocol, or if malicious attackers are interfering with the network.

In peer-to-peer networks, where there is no central authority, Byzantine fault tolerance is more relevant. Think of the blockchain, for example.

The theoretical description of an algorithm can declare that certain things are simply assumed not to happen—and in non-Byzantine systems, we do have to make some assumptions about faults that can and cannot happen. However, a real implementation may still have to include code to handle the case where something happens that was assumed to be impossible, even if that handling boils down to printf("Sucks to be you") and exit(666)—i.e., letting a human operator clean up the mess. (This is arguably the difference between computer science and software engineering.)

9. Consistency and Consensus

Packets can be lost, reordered, duplicated, or arbitrarily delayed in the network; clocks are approximate at best; and nodes can pause due to garbage collection or crash at any time. We need to find ways of tolerating faults in a distributed system.

The best way of building fault-tolerant systems is to find some general-purpose abstractions with useful guarantees. For example, by using a transaction, the application can pretend that there are no crashes (atomicity), that nobody else is concurrently accessing the database (isolation), and that storage devices are perfectly reliable (durability). Even though crashes, race conditions, and disk failures do occur, the transaction abstraction hides those problems so that the application doesn’t need to worry about them.

Consensus

When users register for a new social network, how can one ensure that a username is unique? If one node is going to accept a registration, it needs to somehow know that another node isn’t concurrently in the process of registering the same name. This problem leads us toward another useful abstraction: consensus.

Achieving consensus means deciding something in such a way that all nodes agree on what was decided, and such that the decision is irrevocable.

Split brain 🧠

Say you have a database with single-leader replication. If the leader dies and you need to fail over to another node, the remaining database nodes can use consensus to elect a new leader. If two nodes both believe that they are the leader, we have a split brain, and it often leads to data loss.

Consistency guarantees

Most replicated databases provide at least eventual consistency (if you stop writing and wait some time, then eventually all reads will return the same value). Stronger guarantees may have worse performance or be less fault-tolerant than systems with weaker guarantees.

For example, linearizability is appealing because it makes a database behave like a variable in a single-threaded program, but it’s nevertheless slow, especially with large network delays.

Causality

Unlike linearizability, which puts all operations in a single, totally ordered timeline, causality provides us with a weaker consistency model: some things can be concurrent, so the version history is like a timeline with branching and merging. Causal consistency does not have the coordination overhead of linearizability and is much less sensitive to network problems.

Kicking the can down the road 🥫

Although a single-leader database can provide linearizability without executing a consensus algorithm on every write, it still requires consensus to maintain its leadership and for leadership changes.

Tools like ZooKeeper play an important role in providing an “outsourced” consensus, failure detection, and membership service that applications can use.

Nevertheless, not every system necessarily requires consensus: for example, leaderless and multi-leader replication systems typically do not use global consensus. The conflicts that occur in these systems are a consequence of not having consensus across different leaders, but maybe that’s okay: maybe we simply need to cope without linearizability and learn to work better with data that has branching and merging version histories.
