Insights in 'Designing Data-Intensive Applications' - Part 1/3

A post to share key insights after reading Martin Kleppmann's essential book. Part 1/3

Welcome to my blog on the book "Designing Data Intensive Applications" by Martin Kleppmann. In this post, I will share with you key principles, trade-offs, and technologies that I found as the most interesting for building robust and scalable data systems.

Reading it is no easy feat (>600 pages), but I do strongly recommend it if you want to have a broader vision for the next time you have to design a system, or just want to brush up on some concepts.

Some of these notes are as they appear in the original book, most tailored by me, but all ideas are a creation of Martin or authors Martin quotes in his book.

This is Part 1. Check Part 2, Distributed Data, here, and Part 3, Derived Data, here.

- Foundations of Data Systems

Who is this for? Why should I read this?

The book and this blog are for software engineers, architects, and managers who love to code and design systems. It is especially relevant if you need to make decisions about the architecture of the systems you work on. Even if you have no choice over your tools, I hope this blog will help you understand their strengths and weaknesses, just as the book did for me.

As software engineers and architects, we need to have a technically accurate and precise understanding of the various technologies and their trade-offs if we want to build good applications. Often times, aspects of a system are looked over or outright dismissed, such as message queues, caches, search indexes, frameworks for batch and stream processing.

If you understand those principles, you’re in a position to see where each tool fits in, how to make good use of it, and how to avoid its pitfalls. That’s where Kleppmann's book comes in, and that's what I want to highlight in this post.

Foundations of Data Systems

Preface

What is data-intensive anyway?

Is it yet another buzz phrase to add traction for lame LinkedIn posts? Maybe. But in essence, an application is data-intensive if data is its primary challenge—the quantity of data, the complexity of data, or the speed at which it is changing—as opposed to compute-intensive, where CPU cycles are the bottleneck.

A data-intensive application is typically built from standard building blocks that provide commonly needed functionality. For example, many applications need to:

- Store data for later (databases)

- Remember the result of an expensive operation, to speed up reads (caches)

- Allow users to search data by keyword or filter it in various ways (search indexes

- Periodically crunch a large amount of accumulated data (batch processing)

- Send a message to another process, to be handled asynchronously (stream processing)

Show me the money (and the system)

Businesses need to be agile, test hypotheses cheaply, and respond quickly to new market insights by keeping development cycles short and data models flexible. No matter how strong your business plan looks, without a proper system that fits this ideal, there is little chance that startup or company will prosper.

"You’re not Google or Amazon. Stop worrying about scale!"

Building for scale that you don’t need is wasted effort and may lock you into an inflexible design. In effect, it is a form of premature optimization. However, it’s also important to choose the right tool for the job, and different technologies each have their own strengths and weaknesses.

1. Reliable, Scalable, and Maintainable Applications

An application has to meet various requirements in order to be useful. There are functional requirements (what it should do, such as allowing data to be stored, retrieved, searched, and processed in various ways), and some nonfunctional requirements (general properties like security, reliability, compliance, scalability, compatibility, and maintainability). Let's discuss the latter.

Reliability

A system is reliable if it continues to perform the correct function users expect at the desired level of performance, even in the face of adversity (hardware or software faults, users making mistakes or using the software in unexpected ways).

There are situations in which we may choose to sacrifice reliability in order to reduce development cost (e.g., when developing a prototype product for an unproven market) or operational cost (e.g., for a service with a very narrow profit margin)—but we should be very conscious of when we are cutting corners.

Scalability

A system is scalable if its performance stays ‘good enough’ under growth in data volume, traffic volume, or complexity.

In an unproven product it’s usually more important to be able to iterate quickly on product features than it is to scale to some hypothetical future load.

The architecture of systems that operate at large scale is usually highly specific to the application—there is no such thing as a one-size-fits-all scalable architecture. The problem may be the volume of reads, the volume of writes, the volume of data to store, the complexity of the data, the response time requirements, the access patterns, or (usually) some mixture of all of these plus many more issues.

Some systems can automatically add computing resources when they detect a load increase, whereas other systems are scaled manually by a human.

An architecture that is appropriate for one level of load is unlikely to cope with 10 times that load. If you are working on a fast-growing service, it is therefore likely that you will need to rethink your architecture on every order of magnitude load increase.

Maintainability

A system is maintainable if, over time, many different people will work on the system, and they are all able to work on it productively. In essence it’s about making life better for the engineering and operations teams who need to work with the system:

- self-healing where appropriate, but also giving administrators manual control

- good documentation and an easy-to-understand operational model

It is well known that the majority of the cost of software is not in its initial development, but in its ongoing maintenance.

Maintainability can be divided into three design principles: Operability: it should be easy to…

- Keep the system running smoothly

- Use monitoring for tracking down the cause of problems

- Make security patches

- Deploy and rollback

- Migrate applications

- Preserve the organization's knowledge (often looked over)

- Provide visibility into the runtime behavior

- Avoiding dependency on individual machines

- Plan the expected capacity of your systems, and monitor for changes

Good operations can often work around the limitations of bad (or incomplete) software, but good software cannot run reliably with bad operations.

Simplicity

- Make it easy for new engineers to understand the system, by removing as much complexity as possible from the system. (This is not the same as simplicity of the user interface).

- Complexity slows down everyone who needs to work on the system, further increasing the cost of maintenance.

Evolvability

- Make it easy for engineers to make changes to the system in the future.

A tool for the task

Many new tools for data storage and processing have emerged in recent years. They are optimized for a variety of different use cases, and they no longer neatly fit into traditional categories. For example, there are data stores that are also used as message queues (Redis), and there are message queues with database-like durability guarantees (Apache Kafka).

This ambiguity can work for specific use cases, but generally makes the choice of a tool harder and sticks your system to the tools limits, opposite to the more flexible approach, where the work is broken down into tasks that can be performed efficiently on a single tool, and those different tools are stitched together using application code.

Faults and failures

A fault is usually defined as one component of the system deviating from its spec, whereas a failure is when the system as a whole stops providing the required service to the user.

Faults can be in hardware (typically random and uncorrelated), software (bugs are typically systematic and hard to deal with), and humans (who inevitably make mistakes from time to time). Fault-tolerance techniques can hide certain types of faults from the end user

Hard disks are reported as having a mean time to failure (MTTF) of about 10 to 50 years. On a storage cluster with 10,000 disks, we should expect on average one disk to die per day.

It is impossible to reduce the probability of a fault to zero; it is usually best to design fault-tolerance mechanisms that prevent faults from causing failures. Failures of e-commerce sites can have huge costs in terms of lost revenue and damage to reputation (I was involved in a crash that cost my employer $40K in only 3 hours).

It is a common practice in big tech companies, such as Netflix, Amazon or MercadoLibre, to deliberately induce faults, so as to ensure that the fault-tolerance machinery is continually exercised and tested. A famous one is Netflix's Chaos Monkey.

Such fault-tolerant systems also have operational advantages: a single-server system requires planned downtime if you need to reboot the machine (to apply operating system security patches, for example), whereas a system that can tolerate machine failure can be patched one node at a time, without downtime of the entire system.

If a system is expected to provide some guarantee (for example, in a message queue, that the number of incoming messages equals the number of outgoing messages), it can constantly check itself while it is running and raise an alert if a discrepancy is found.

Expect the best, prepare for the worst

-

Design systems in a way that minimizes opportunities for error. For example, well-designed abstractions, APIs, and admin interfaces make it easy to do “the right thing” and discourage “the wrong thing.” However, if the interfaces are too restrictive people will work around them, negating their benefit, so this is a tricky balance to get right.

-

Provide fully featured non-production sandbox environments where people can explore and experiment safely, using real data, without affecting real users.

-

Set up detailed and clear monitoring, such as performance metrics and error rates. When a problem occurs, metrics can be invaluable in diagnosing the issue: requests per second to a web server, the ratio of reads to writes in a database, the number of simultaneously active users in a chat room, the hit rate on a cache, or something else.

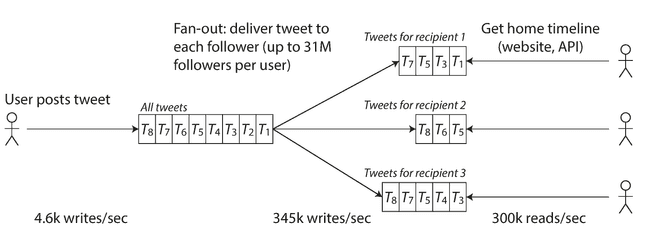

Twitter example

- Post tweet: a user can publish a new message to their followers (4.6k requests/sec on average, over 12k requests/sec at peak).

- Home timeline: a user can view tweets posted by the people they follow (300k requests/sec).

Twitter’s scaling challenge is not primarily due to tweet volume, but due to fan-out: each user follows many people, and each user is followed by many people. When a user posts a tweet, the system has to look up all the people who follow that user, and insert the new tweet into each of their home timeline caches. The request to read the home timeline is then cheap, because its result has been computed ahead of time.

This works better because the average rate of published tweets is almost two orders of magnitude lower than the rate of home timeline reads, and so in this case it’s preferable to do more work at write time and less at read time. But some users have over 30 million followers. This means that a single tweet may result in over 30 million writes to home timelines! Doing this in a timely manner—Twitter tries to deliver tweets to followers within five seconds—is a significant challenge.

Tweets from any celebrities that a user may follow are fetched separately and merged with that user’s home timeline when it is read. This hybrid approach is able to deliver consistently good performance.

Measuring Performance: response time percentiles

- When you increase a load parameter and keep the system resources (CPU, memory, network bandwidth, etc.) unchanged, how is the performance of your system affected?

- When you increase a load parameter, how much do you need to increase the resources if you want to keep performance unchanged?

Both questions require performance numbers.

In a batch processing system such as Hadoop, we usually care about throughput—the number of records we can process per second, or the total time it takes to run a job on a dataset of a certain size. In online systems, what’s usually more important is the service’s response time.

Latency and response time are often used synonymously, but they are not the same. The response time is what the client sees: besides the actual time to process the request (the service time), it includes network delays and queueing delays. Latency is the duration that a request is waiting to be handled—during which it is latent, awaiting service.

We therefore need to think of response time not as a single number, but as a distribution of values that you can measure. The mean is not a very good metric if you want to know your “typical” response time, because it doesn’t tell you how many users actually experienced that delay.

If your median response time is 200ms, that means half your requests return in less than 200ms, and half your requests take longer than that. The median is also known as the 50th percentile, and sometimes abbreviated as p50. If the 95th percentile response time is 1.5 seconds, that means 95 out of 100 requests take less than 1.5 seconds.

Amazon describes response time requirements for internal services in terms of the 99.9th percentile, even though it only affects 1 in 1,000 requests. This is because the customers with the slowest requests are often those who have the most data on their accounts because they have made many purchases—that is, they’re the most valuable customers!

You may want to keep a rolling window of response times of requests in the last 10 minutes.

Bad effects

As a server can only process a small number of things in parallel (limited, for example, by its number of CPU cores), it only takes a small number of slow requests to hold up the processing of subsequent requests—an effect sometimes known as head-of-line blocking. Therefore, it is important to measure response times on the client side.

Even if only a small percentage of backend calls are slow, the chance of getting a slow call increases if an end-user request requires multiple backend calls, and so a higher proportion of end-user requests end up being slow (an effect known as tail latency amplification).

2. Data Models and Query Languages

The best-known data model today, proposed by Edward Codd in 1970, is data organized into relations (tables in SQL), where each relation is an unordered collection of tuples (rows in SQL). The typical use cases for mainframes in the 1960s and 1970s were transaction processing (sales transactions, stock-keeping in warehouses) and batch processing (customer invoicing, payroll).

Decades later, new use cases and changes in scale brought new solutions to the space.

The Birth of NoSQL

NoSQL is the latest attempt to overthrow the relational model’s dominance. The name “NoSQL” is unfortunate, since it doesn’t actually refer to any particular technology—it was originally intended simply as a catchy Twitter hashtag for a meetup on open source, distributed, non-relational databases in 2009.

There are several driving forces behind the adoption of NoSQL databases, including:

- A need for greater scalability: very large datasets or write throughput

- A preference for free and open source over commercial products

- Specialized query operations that are not well supported by the relational model

- Frustration with the restrictiveness of relational schemas

The Object-Relational Impedance Mismatch

Picture the database model of a profile on LinkedIn. It may have multiple one-to-many relations: one user can have many positions, many education entries and many contact info options. This translates into a separate table for each relation, and into multiple joins when constructing the entire profile.

A JSON representation of this profile has better locality than this multi-table schema. If you want to fetch a profile in the relational example, you need to either perform multiple queries or perform a messy multi-way join between the users table and its subordinate tables. In the JSON representation, all the relevant information is in one place, and one query is sufficient.

ID or text? A question of duplication

When you use an ID, the information that is meaningful to humans (millions of users can share the same education entry) is stored in only one place, and everything that refers to it uses an ID (which only has meaning within the database). When you store the text directly, you are duplicating the human-meaningful information in every record that uses it. Anything that is meaningful to humans may need to change sometime in the future—and if that information is duplicated, all the redundant copies need to be updated. That incurs write overheads, and risks inconsistencies. Removing such duplication is the key idea behind normalization in databases.

Unfortunately, normalizing this data requires many-to-one relationships (many people live in one particular region, many people work in one particular industry), which don’t fit nicely into the document model. In relational databases, it’s normal to refer to rows in other tables by ID, because joins are easy. In document databases, joins are not needed for one-to-many tree structures, and support for joins is often weak.

Moreover, even if the initial version of an application fits well in a join-free document model, data has a tendency of becoming more interconnected as features are added to applications.

What to choose? It depends...

The main arguments in favor of the document data model are schema flexibility, better performance due to locality, and that for some applications it is closer to the data structures used by the application. The relational model counters by providing better support for joins, and many-to-one and many-to-many relationships.

If your application does use many-to-many relationships, the document model becomes less appealing. It’s possible to reduce the need for joins by denormalizing, but then the application code needs to do additional work to keep the denormalized data consistent.

Schemaless?

No schema means that arbitrary keys and values can be added to a document, and when reading, clients have no guarantees as to what fields the documents may contain.

Document databases are sometimes called schemaless, but that’s misleading, as the code that reads the data usually assumes some kind of structure. A more accurate term is schema-on-read (the structure of the data is implicit, and only interpreted when the data is read), in contrast with schema-on-write (the traditional approach of relational databases, where the schema is explicit and the database ensures all written data conforms to it).

Schema-on-read is similar to dynamic (runtime) type checking in programming languages, whereas schema-on-write is similar to static (compile-time) type checking.

On the one hand, changes to the the structure of data in a document database have to be added to application code to handle the old and new documents. On the other hand, in a “statically typed” database schema, you would typically perform a migration, which often requires downtime and is slow. In cases where all records are expected to have the same structure, schemas are a useful mechanism for documenting and enforcing that structure. Otherwise, the schema-on-read approach is more advantageous.

Storage Locality

A document is usually stored as a single continuous string, encoded as JSON for example. If your application often needs to access the entire document, there is a performance advantage to this storage locality. This advantage only applies if you need large parts of the document at the same time. The database typically needs to load the entire document, even if you access only a small portion of it, which can be wasteful on large documents.

Graph Stuff

What if many-to-many relationships are very common in your data? The relational model can handle simple cases of many-to-many relationships, but as the connections within your data become more complex, it becomes more natural to start modeling your data as a graph.

Social graphs Vertices are people, and edges indicate which people know each other.

The web graph Vertices are web pages, and edges indicate HTML links to other pages.

Graphs are also good for evolvability: as you add features to your application, a graph can easily be extended to accommodate changes in your application’s data structures.

Graph Queries

In a relational database, you usually know in advance which joins you need in your query. In a graph query, you may need to traverse a variable number of edges before you find the vertex you’re looking for—that is, the number of joins is not fixed in advance.

If the same query can be written in 4 lines in one query language but requires 29 lines in another, that just shows that different data models are designed to satisfy different use cases. It’s important to pick a data model that is suitable for your application.

3. Storage and Retrieval

In order to tune a storage engine to perform well on your kind of workload, you need to have a rough idea of what the storage engine is doing under the hood. There is a big difference between storage engines that are optimized for transactional workloads and those optimized for analytics.

An Important Trade-Off: writes

Well-chosen indexes speed up read queries, but every index slows down writes. You can then choose the indexes that give your application the greatest benefit, without introducing more overhead than necessary (i.e. indexes must fit in memory).

Some indexes, like log-structured indexes, rewrite data multiple times due to their repeated compaction and merging nature. This effect—one write to the database resulting in multiple writes to the disk—is known as write amplification, and has a direct performance cost.

Another type of indexes, B-trees, are very ingrained in the architecture of databases and provide consistently good performance for many workloads. In new datastores, log-structured indexes are becoming increasingly popular. There is no quick and easy rule for determining which type of storage engine is better for your use case, so it is worth testing empirically.

Another Trade-Off: clustered indexes

A compromise between a clustered index (storing all row data within the index) and a non-clustered index (storing only references to the data within the index) is known as a covering index or index with included columns.

As with any kind of duplication of data, clustered and covering indexes can speed up reads, but they require additional storage and can add overhead on writes. Databases also need to go to additional effort to enforce transactional guarantees, because applications should not see inconsistencies due to the duplication.

Multi-column indexes

In a database of weather observations you could have a two-dimensional index (on date, temperature) in order to efficiently search for all the observations during the year 2013 where the temperature was between 25 and 30C. With a one-dimensional index, you would have to either scan over all the records from 2013 (regardless of temperature) and then filter them by temperature, or vice versa. A 2D index could narrow down by timestamp and temperature simultaneously!

A question of cost and encoding

As RAM becomes cheaper, the cost-per-gigabyte argument in favor of disks is eroded. Many datasets are simply not that big, so it’s quite feasible to keep them entirely in memory, potentially distributed across several machines. This has led to the development of in-memory databases.

Some in-memory key-value stores, such as Memcached, are intended for caching use only, where it’s acceptable for data to be lost if a machine is restarted. But other in-memory databases aim for durability, which can be achieved with special hardware (such as battery-powered RAM), by writing a log of changes to disk or snapshots to disk.

Counterintuitively, the performance advantage of in-memory databases is not due to the fact that they don’t need to read from disk. Even a disk-based storage engine may never need to read from disk if you have enough memory, because the operating system caches recently used disk blocks in memory anyway. Rather, they can be faster because they can avoid the overheads of encoding in-memory data structures in a form that can be written to disk.

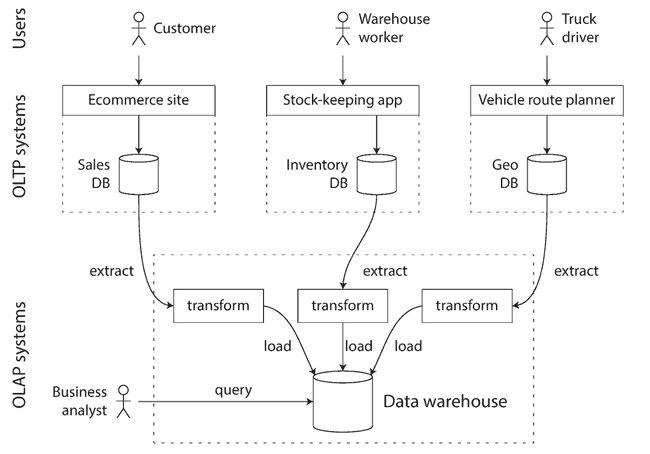

Transaction processing vs Analytic systems

A transaction needn’t necessarily have ACID properties. Transaction processing just means allowing clients to make low-latency reads and writes—as opposed to batch processing jobs, which only run periodically.

| Property | Transaction processing systems (OLTP) | Analytic systems (OLAP) |

|---|---|---|

| Main read pattern | Small number of records per query, fetched by key | Aggregate over large number of records |

| Main write pattern | Random-access, low-latency writes from user input | Bulk import (ETL) or event stream |

| Primarily used by | End user/customer, via web app | Internal analyst, for decision support |

| What data represents | Latest state of data (current point in time) | History of events that happened over time |

| Dataset size | Gigabytes to terabytes | Terabytes to petabytes |

In the early 1990s, there was a trend for companies to stop using their OLTP systems for analytics purposes, and to run the analytics on a separate database instead. This separate database was called a data warehouse.

Data Warehousing

OLTP systems (i.e. checkout, inventory, etc) are expected to be highly available and to process transactions with low latency, since they are critical for business. It's a bad idea to let business analysts run ad hoc analytic queries on an OLTP database, since those queries are often expensive, which can harm the performance of concurrently executing transactions.

A data warehouse, by contrast, is a separate database that analysts can query without affecting OLTP operations. It contains read-only copy of the data in all the various OLTP systems in the company.

Even though data warehouse and OLTP databases share an SQL interface, they differ in their query optimizations, leading to specialization for transaction processing or analytics workloads by most vendors (but not both).

Separate table of dates

Date and time are often represented using dimension tables (a subset of fact tables), because this allows additional information about dates (such as public holidays) to be encoded, allowing queries to differentiate between sales on holidays and non-holidays.

Columnar Storage

When your queries require sequentially scanning across a large number of rows, it becomes important to encode data very compactly, to minimize the amount of data that the query needs to read from disk.

The idea behind column-oriented storage is simple: don’t store all the values from one row together, but store all the values from each column together instead. If each column is stored in a separate file, a query only needs to read and parse those columns that are used in that query, which can save a lot of work.

Column storage is easiest to understand in a relational data model, but it applies equally to non-relational data. For example, Parquet is a columnar storage format that supports a document data model, based on Google’s Dremel.

Data^Data^Data: Data Cubes

A common special case of a materialized view is known as a data cube or OLAP cube.

A materialized view is an actual copy of query results written to disk, unlike a virtual view, which is a shortcut for writing queries, as the SQL engine expands it into the underlying query on-the-fly when read.

The advantage of a materialized data cube is that certain queries become very fast because they have effectively been precomputed. For example, if you want to know the total sales per store yesterday, you just need to look at the totals along the appropriate dimension—no need to scan millions of rows.

The disadvantage is that a data cube doesn’t have the same flexibility as querying the raw data. For example, there is no way of calculating which proportion of sales comes from items that cost more than $100, because the price isn’t one of the dimensions. Most data warehouses therefore try to keep as much raw data as possible, and use aggregates such as data cubes only as a performance boost for certain queries.

4. Encoding and Evolvability

Scenarios in which data encodings are important

- Databases, where the process writing encodes the data and the process reading decodes it

- RPC and REST APIs, where the client encodes a request, the server decodes it and encodes a response, and the client finally decodes the response

- Asynchronous message passing (using message brokers), where nodes communicate by sending each other messages that are encoded by the sender and decoded by the recipient

A bit of care

Relational databases generally assume that all data in the database conforms to one schema at any one point in time. By contrast, schema-on-read (“schemaless”) databases don’t enforce a schema, so the database can contain a mixture of older and newer data formats written at different times.

- With server-side applications you may want to perform a rolling upgrade of nodes. This allows new versions to be deployed without service downtime, and thus encourages more frequent releases and better evolvability.

- With client-side applications you’re at the mercy of the user, who may not install the update for some time!

Backward compatibility Newer code can read data that was written by older code.

Forward compatibility Older code can read data that was written by newer code.

The JSON returned by Twitter’s API includes tweet IDs twice, once as a JSON number and once as a decimal string, to work around the fact that the numbers are not correctly parsed by JavaScript applications

Formats for Encoding Data

Programs usually work with data in two different representations:

- In memory, data is kept in objects, structs, lists, arrays, hash tables, trees, and so on. These data structures are optimized for efficient access and manipulation by the CPU (typically using pointers).

- When you want to write data to a file or send it over the network, you have to encode it as some kind of self-contained sequence of bytes (for example, a JSON document). Since a pointer wouldn’t make sense to any other process, this sequence-of-bytes representation looks quite different from the data structures that are normally used in memory.

Don't use your language's built-in encoding

- If an attacker can get your application to decode an arbitrary byte sequence, they can instantiate arbitrary classes.

- Efficiency (CPU time taken to encode or decode, and the size of the encoded structure) is also often an afterthought. For example, Java’s built-in serialization is notorious for its bad performance and bloated encoding.

Comments

Thank you for your comment! Under review for moderation.